Multimodal AI

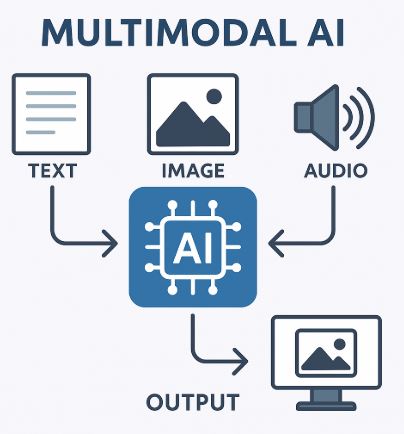

A clean 2D digital infographic showing how multimodal AI feeds different data types (text, images, and audio) into a single AI model.

Welcome to the future of artificial intelligence. Multimodal AI is changing the way machines understand the world by combining text, images, and audio into one powerful system. In this article, we’ll explore how it works, why it matters, and how it’s being used today.

Table of Contents

- What is Multimodal AI?

- How Multimodal AI Works

- Real-World Applications of Multimodal AI

- Popular Tools and Models

- Why It Matters for the Future

Multimodal AI: Integrating Visual, Audio, and Text Inputs in One Model

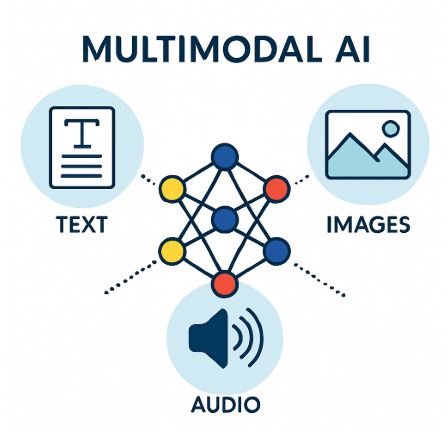

This digital infographic showcases the concept of multimodal learning by connecting icons of audio, visual, and language inputs, centered around an AI brain graphic.

1. What is Multimodal AI?

Multimodal AI refers to systems that can process and understand multiple types of data, such as text, images, and sound, within a single model. Traditional AI systems usually handle one type of input (only text, or only images). Multimodal AI can relate information across data types, which brings its understanding closer to how humans combine sight, sound, and language.

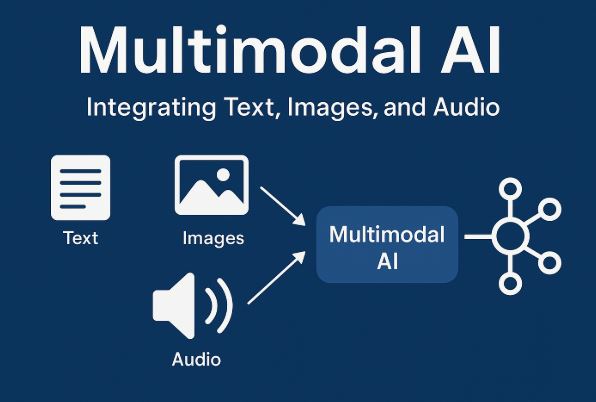

A 2D illustration breaking down how multimodal data flows through an AI pipeline—highlighting features like speech recognition, image processing, and text analysis working together.

2. How Multimodal AI Works

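These systems use deep learning models that combine data from different sources. Typically, each type of input is first converted into numerical vectors (embeddings) by its own encoder, and those representations are then fused so the model can reason over them jointly. For example, a model might analyze a video's frames (images), its subtitles (text), and the speaker's voice (audio). This combined analysis can improve results in tasks like translation, search, and content creation.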
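A minimal sketch of the "encode, then fuse" idea, assuming NumPy and made-up feature sizes. Each modality gets its own projection into a shared embedding space, and the projected vectors are concatenated into one joint representation. The random matrices stand in for trained encoders, and the names `FEATURE_DIMS`, `SHARED_DIM`, `embed`, and `fuse` are hypothetical, not taken from any particular model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical raw feature sizes per modality (stand-ins for the outputs of,
# e.g., a text encoder, an image encoder, and an audio encoder).
FEATURE_DIMS = {"text": 300, "image": 512, "audio": 128}
SHARED_DIM = 64  # assumed size of the shared embedding space

# One projection matrix per modality. Random here; learned in a real model.
projections = {
    name: rng.normal(scale=dim ** -0.5, size=(dim, SHARED_DIM))
    for name, dim in FEATURE_DIMS.items()
}


def embed(name: str, features: np.ndarray) -> np.ndarray:
    """Project one modality's features into the shared space and L2-normalize."""
    z = features @ projections[name]
    return z / np.linalg.norm(z)


def fuse(inputs: dict) -> np.ndarray:
    """Concatenate the per-modality embeddings into a single joint vector."""
    return np.concatenate([embed(name, inputs[name]) for name in FEATURE_DIMS])


# Fake features for one video clip: its subtitles, a frame, and its soundtrack.
clip = {name: rng.normal(size=dim) for name, dim in FEATURE_DIMS.items()}
joint = fuse(clip)
print(joint.shape)  # (192,) = 3 modalities x 64 dimensions
```

Concatenation is only one fusion strategy. Real systems may instead fuse earlier (mixing tokens from all modalities inside one network) or later (combining each modality's separate predictions).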

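Two techniques do much of the work of connecting data types. Multimodal embeddings map inputs from different modalities into a shared vector space, so that, for instance, a photo of a dog and the caption "a dog" end up close together. Cross-attention mechanisms let one modality draw on another: each text token can weigh and pull in information from the most relevant parts of an image. The result is more flexible AI that can relate what it reads, sees, and hears.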
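Cross-attention can be sketched as standard scaled dot-product attention in which the queries come from one modality and the keys and values from another. This NumPy version is a minimal illustration, assuming text tokens attend over image patches. The sizes, random weights, and function names are hypothetical, and real models add multiple heads, learned parameters, and normalization layers.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max along the axis for numerical stability.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(text_tokens, image_patches, w_q, w_k, w_v):
    """Scaled dot-product cross-attention: queries come from text tokens,
    keys and values come from image patches."""
    q = text_tokens @ w_q                      # (n_text, d)
    k = image_patches @ w_k                    # (n_patches, d)
    v = image_patches @ w_v                    # (n_patches, d)
    scores = q @ k.T / np.sqrt(q.shape[-1])    # (n_text, n_patches)
    weights = softmax(scores, axis=-1)         # each row sums to 1 over patches
    return weights @ v, weights                # (n_text, d), (n_text, n_patches)


rng = np.random.default_rng(0)
d_model, d = 32, 16                            # hypothetical sizes
text_tokens = rng.normal(size=(5, d_model))    # e.g. 5 caption tokens
image_patches = rng.normal(size=(9, d_model))  # e.g. a 3x3 grid of patches
w_q, w_k, w_v = (rng.normal(scale=d_model ** -0.5, size=(d_model, d)) for _ in range(3))

out, attn = cross_attention(text_tokens, image_patches, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (5, 16) (5, 9)
```

Each row of `attn` shows how strongly one text token "looks at" each image patch, which is the mechanism that lets a caption word be grounded in a region of the picture.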

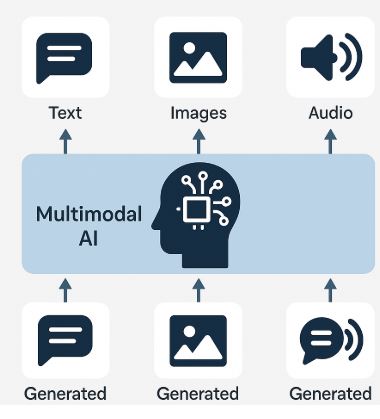

This vibrant infographic visually represents a Multimodal AI system with neural networks merging sound waves, pictures, and text snippets, symbolizing unified cognitive processing.

3. Real-World Applications of Multimodal AI

Multimodal AI is being used across industries:

- Healthcare: AI can analyze X-ray images together with doctors' notes.

- Education: Learning platforms use speech and visual content to enhance understanding.

- Social Media: Tools detect harmful content across video, voice, and comments.

- Retail: Visual search lets you shop by uploading an image.
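For a case like content moderation across video, voice, and comments, one simple option is late fusion: score each modality separately, then combine the scores. The sketch below assumes hypothetical per-modality classifiers that already output a probability of harm. The weights, threshold, and `late_fusion` helper are illustrative choices, not values or code from any real platform.

```python
def late_fusion(scores: dict, weights: dict) -> float:
    """Weighted average of per-modality probabilities (late fusion).

    Only modalities present in `scores` are used, so a post without audio
    is scored from its video and text alone (weights are renormalized).
    """
    total = sum(weights[m] for m in scores)
    return sum(weights[m] * scores[m] for m in scores) / total


# Illustrative weights and threshold, not tuned values.
WEIGHTS = {"video": 0.4, "audio": 0.3, "text": 0.3}
THRESHOLD = 0.5

# Hypothetical outputs of three separate classifiers for one post.
post = {"video": 0.2, "audio": 0.7, "text": 0.9}
risk = late_fusion(post, WEIGHTS)
print(f"combined risk={risk:.2f}, flag={risk >= THRESHOLD}")
```

Late fusion is easy to build from existing single-modality models, but it cannot capture interactions that only appear when modalities are read together (for example, a harmless image paired with a hostile caption). Cross-attention-style fusion is meant to address that.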

It’s also behind features like image captioning, voice-to-text search, and real-time translation.
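Visual search, as in the retail example, can be sketched as nearest-neighbor lookup in an embedding space: embed the uploaded photo, then return the catalog items whose embeddings have the highest cosine similarity. The toy 4-dimensional vectors and product IDs below are invented for illustration. A real system would obtain embeddings from an image encoder and would typically use an approximate nearest-neighbor index for large catalogs.

```python
import numpy as np


def normalize(x: np.ndarray) -> np.ndarray:
    """L2-normalize along the last axis so a dot product equals cosine similarity."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def visual_search(query_emb, catalog_embs, product_ids, k=3):
    """Return the k catalog items most similar to the query embedding."""
    sims = normalize(catalog_embs) @ normalize(query_emb)
    top = np.argsort(-sims)[:k]  # indices sorted by descending similarity
    return [(product_ids[i], float(sims[i])) for i in top]


# Toy embeddings standing in for the output of an image encoder.
catalog = np.array([
    [0.9, 0.1, 0.0, 0.0],  # red sneaker
    [0.8, 0.2, 0.1, 0.0],  # red running shoe
    [0.0, 0.1, 0.9, 0.3],  # blue handbag
    [0.1, 0.0, 0.2, 0.9],  # wool scarf
])
ids = ["sneaker-red", "runner-red", "bag-blue", "scarf-wool"]
query = np.array([0.85, 0.15, 0.05, 0.0])  # embedding of the user's uploaded photo

for pid, score in visual_search(query, catalog, ids, k=2):
    print(pid, round(score, 3))
```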

A polished educational infographic that maps the applications of Multimodal AI across sectors like healthcare, entertainment, and e-commerce, emphasizing its real-world use cases.

4. Popular Tools and Models

Many big tech players are leading the way:

- OpenAI GPT-4V: Understands images along with text prompts.

- Google Gemini: Built to process text, images, audio, and video natively.

- Meta Llama 4: An open-weight model family that natively accepts both text and image inputs.
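To show what "images along with text prompts" looks like in practice, here is a minimal sketch that builds a single request message mixing a text part and an inline image. It follows the content-parts layout that OpenAI documents for its Chat Completions API (a `"text"` part plus an `"image_url"` part holding a base64 data URL), as we understand it. The helper name `build_vision_message` and the `"MODEL_NAME"` placeholder are hypothetical, and the code only builds the payload; it does not call any service.

```python
import base64
import json


def build_vision_message(prompt: str, image_bytes: bytes, mime: str = "image/jpeg") -> dict:
    """Build one user message containing a text part and an inline image part."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            # The image travels inline as a base64 data URL.
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{b64}"}},
        ],
    }


# Placeholder bytes (a JPEG header only); in practice, read a real image file.
message = build_vision_message("Describe the product in this photo.", b"\xff\xd8\xff")
request_body = {"model": "MODEL_NAME", "messages": [message]}  # placeholder model name
print(json.dumps(request_body, indent=2))
```

With the official `openai` Python package, a message like this would typically be passed as `client.chat.completions.create(model=..., messages=[message])`. Check the current OpenAI documentation for supported model names, since vision-capable model identifiers have changed over time.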

5. Why It Matters for the Future

Multimodal AI is pushing the boundaries of how we interact with machines. It’s a step toward AI systems that can see, hear, and read, much as humans do. That means:

- Better voice assistants

- Smarter search engines

- More interactive learning

- Creative content generation

As more companies adopt this technology, it’s likely to become a routine part of our digital lives.